Risk Translation & Local Communication Practices

How about, for example, expanding the UN-SPIDER Knowledge Portal to include a new category called “Risk Translation & Local Communication Practices” in order to build a semantic bridge between technical terms and everyday logic?

The Sendai Framework goals emphasize “access to early warning systems for all.” But access means not only technical availability but also comprehensibility and trust. So we should ask ourselves: How can we ensure that this data is not only received but also truly understood and acted upon? A system that is technically excellent can still be ineffective if it loses its connection to the people it is meant to protect.

This also requires the creation of a “translation layer” consisting of:

- A network of local intermediaries who translate data culturally

- The development of a toolkit for culturally sensitive risk communication

- The integration of social science findings into the system architecture

- The promotion of community-based early warning systems that use UN-SPIDER data, for example, but are locally anchored

I myself live in the middle of Europe, in a large city that has already been hit by HQ100 floods. Let's take a little “dive” into this world.

What many people associate with “HQ100,” for example, and why that can be problematic.

The term “HQ100” (flood with a statistical recurrence interval of 100 years) is intuitively misinterpreted by many people.

HQ100 stands for the statistically calculated discharge that is reached or exceeded on average once every 100 years at a specific section of a watercourse. This means:

- HQ100 is not a fixed water level or discharge value, but rather a locally calculated threshold value derived from historical measurement data, hydrological models and regional conditions.

An HQ100 in Passau (Danube, Inn, Ilz) has completely different hydrological characteristics than an HQ100 in Cologne (Rhine) or in Dresden or Magdeburg (Elbe), because:

- the catchment areas are different

- the river characteristics vary, e.g., flow velocity, bed profile

- the climatic conditions and heavy rainfall patterns differ

- urban sealing and retention capacities are different

Therefore, an HQ100 cannot be transferred from one location to another (it is always location-specific).

- “It only happens once every 100 years” – statistics are misunderstood as a promise, i.e., many people intuitively read “HQ100” as a temporal “promise” rather than as a statement of probability.

- “We've had it now, so we're safe for 100 years” – if extreme flooding occurs again within a few years, the feeling arises that “the experts were wrong” or “the models are unreliable.”

- “If it happens twice in 30 years, the forecast was wrong” – confidence dwindles because the expectation of “100 years of peace” is disappointed, even though the statistics were correct. Those who do not understand the language then feel excluded and believe that the warning is not intended for them.

- The reality they experience contradicts the logic of the model, i.e., when those affected experience flooding several times within a few decades, they say, “This is not a once-in-a-century flood – it happens all the time!” The discrepancy between the model and their own experience then leads to mistrust of science. This means that willingness to take precautions declines because the models are perceived as “unrealistic.”

- Lack of perspective for action, i.e., “HQ100” says nothing about what really needs to be done here. It is only an abstract term without direct instructions for action. The necessary behavior does not occur because the warning has no concrete meaning. Communication remains confined to the expert community.

- Cognitive dissonance and refusal to protect, i.e., when people do not understand a warning, uncertainty arises. This uncertainty then often leads to repression or even defensiveness: “That doesn't affect me,” “They're exaggerating,” “I've already experienced that and it wasn't so bad.” Psychological defense mechanisms block precautionary measures because the communication is not emotionally relatable.

These misunderstandings then lead to:

- Loss of trust in authorities, models and warning systems, decline in acceptance of protective measures and investments

- The media and politicians also reinforce vague terms, because “flood of the century” sounds like a singular, historic event — not a statistical probability. The emotional impact overshadows the factual meaning. Politicians and the media sometimes refer to “HQ100” in general terms without saying: Where exactly was this value reached? Which levels are used as a reference? What uncertainties exist? The terms are taken out of context and thus become misleading. This is precisely why a structured, narrative, and visual translation of these terms is needed so that they can be understood, believed and implemented.

- Technical language creates exclusion instead of participation, because terms such as “HQ100,” “return period,” and “statistical extreme value analysis” are not intuitive, i.e., they are not understood because communication is not accessible — neither linguistically nor culturally.

- Local decision-makers, such as mayors and teachers, are unable to pass on the information.

- Lack of willingness to act: “Why should I prepare when it happens so rarely?” Preventive measures are perceived as unnecessary, early warnings may be ignored or ridiculed and evacuations are carried out too late or not at all.

- Distorted risk perception – Many people underestimate the real probability, i.e., risk awareness decreases and protective measures are perceived as exaggerated. Repeated events then lead to confusion and rejection along the lines of “Again? That can't be right!” We think in terms of experience, not probability, and without semantic translation statistics remain cognitively inaccessible.

Repetitions within 100 years and what they trigger

When an HQ100 event occurs more than once within a few decades, e.g., on the Danube in Passau (1954 and 2013), the following often occurs:

- Doubts about the quality of the model: “Is this even accurate?”

- Confusion about the statistics: “Was that an HQ100 or an HQ500?”

- Rejection of precautionary measures: “The experts say something different every time.”

And yet the statistics are correct; they are just not intuitively understandable, because:

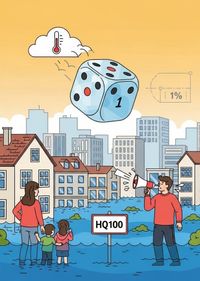

- HQ100 means: 1% probability per year

- This means that it could happen twice in ten years or not at all in 150 years.
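
The two statements above can be checked with a few lines of arithmetic. The following is a minimal sketch, assuming that each year is an independent draw with the same 1% exceedance probability (a simplification that ignores climate trends and clustering of wet years). The function names `prob_exactly` and `prob_at_least` are illustrative and not taken from any hydrological library.

```python
from math import comb


def prob_exactly(k: int, years: int, p: float = 0.01) -> float:
    """Binomial probability of exactly k HQ100 years within `years` years."""
    return comb(years, k) * p**k * (1 - p) ** (years - k)


def prob_at_least(k: int, years: int, p: float = 0.01) -> float:
    """Probability of at least k HQ100 years within `years` years."""
    # Complement of "fewer than k events".
    return 1.0 - sum(prob_exactly(i, years, p) for i in range(k))


if __name__ == "__main__":
    print(f"At least one HQ100 in 100 years: {prob_at_least(1, 100):.1%}")
    print(f"No HQ100 at all in 150 years:    {prob_exactly(0, 150):.1%}")
    print(f"At least two HQ100 in 10 years:  {prob_at_least(2, 10):.2%}")
```

Under these assumptions, the chance of at least one HQ100 in a 100-year span is roughly 63%, not 100%; the chance of none in 150 years is roughly 22%; and two or more within ten years is rare (roughly 0.4%) but not impossible.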

What we need here is semantic risk communication. For early warning to be truly effective, HQ100 must be translated into understandable, culturally relevant and actionable messages.

Examples of possible translations

Technical term = everyday semantics

- HQ100 = A flood that statistically occurs once every 100 years, but could also happen again tomorrow.

- Recurrence period = How often you roll a 100 with a 100-sided die, i.e., the die is rolled again every year.

- Probability of return = 1% chance per year, i.e., it's like playing the lottery, only with “water.”

- HQ100+ = Stronger than what was previously considered extreme

Concrete communication formats are needed, because the repetition of HQ100 events is not an error in the system but an error in communication. If we do not translate HQ100 semantically and psychologically correctly, we lose valuable trust, understanding and willingness to take precautions among the population. Possible formats:

- Narrative marker: “The 2013 flood was stronger than the one in 1954, even though both were classified as HQ100.”

- Visualization: A die with 100 sides, rolled again each year

- Voice messages in simple language: “Even though it has already happened, it can happen again. Statistics do not say ‘only once.’”

- Introduce comparative logic, i.e., “Like the weather: just because it rained yesterday doesn't mean it will stay dry tomorrow.”

Technology is only as powerful as the language it speaks.

Terms with high potential for misunderstanding: these terms are crucial interfaces between warning and action, and they are often invisible stumbling blocks in the last mile of risk communication. If they are not understood, there will be no response, rendering even the best GeoAI system ineffective.

Each term should be structured and considered according to three criteria: semantic clarity (what does the term really mean?), emotional connectivity (how does the term feel to those affected?), and action orientation (what should I do specifically?). The guiding principle is “understandable rather than just technical,” and that also includes a glossary in everyday language (“What it means,” “What it doesn't mean,” “What you can do”).

| Technical term | Everyday translation | Typical misunderstanding | Suggestion |
| --- | --- | --- | --- |
| HQ100 / HQ50 / HQ10 | “Water level that statistically occurs every X years” | “Only happens every 100 years – so not now” | “Just because it’s rare doesn’t mean it won’t happen tomorrow.” |
| Centennial event | “Extremely rare, but can happen anytime” | “Not relevant to me – it hardly ever happens” | |
| Evacuation recommendation | “Please leave the area – it’s dangerous” | “It’s just a recommendation – I’d rather stay” | “Recommendation means: We’re giving you the chance to get out safely.” |
| Lead time | “How much time remains until danger” | “I’ll wait – it’s not here yet” | |
| Model-based forecast | “Prediction based on calculations” | “Just theory – I don’t believe it” | “This isn’t theory – it’s math that can save lives.” |
| Risk zone | “Area with elevated danger” | “I live there, but nothing has ever happened to me” | “If you live there, you need a plan – not panic.” |
| Vulnerability | “How susceptible people or places are” | “I’m not weak – that’s about others” | “Vulnerability isn’t a flaw – it’s a measure of care needs.” |
| Resilience | “How well one can recover” | “Sounds like a technical term – no idea what it means” | “Resilience is like a muscle – you can train it.” |
| Critical infrastructure | “Things we need to live: electricity, water, hospital” | “Doesn’t concern me – I’m not in a hospital” | |
| Trigger value / Threshold | “From here on, warnings or actions are issued” | “I don’t see a difference – why the alarm now?” | |
| Hazard potential | “How bad it could get” | “Potential doesn’t mean it will happen” | |
| Damage scenario | “What could happen, e.g. flooding” | “Just a scenario – not real” | |
| Early warning system | “System that warns of danger in time” | “I didn’t get a warning – so no danger” | “If you hear it, it’s not too early – it’s just in time.” |
| Risk communication | “How danger is communicated” | “That’s just media stuff – I don’t trust it” | |
| Self-protection measures | “What I can do myself to stay safe” | “I’d rather wait for help – I can’t do anything” | |
| Evacuation plan | “Route and place to go in an emergency” | “I don’t know any plan – so I’ll just stay here” | |
| Emergency supplies | “What I need when everything fails” | “I have electricity – why stock up?” | |
| Alert level Red / Orange / Yellow | “How dangerous it currently is” | “I don’t know what the colors mean” | |
| Impact-based forecast | “Prediction with concrete consequences for me” | “I don’t see any impact – so no danger” | |
| Verified information | “Checked, authentic warning” | “I read something else on social media” | |
| Rumors / Misinformation | “Unverified statements that confuse” | “I’d rather act on what I heard” | |
| Risk fatigue | “I’m tired of constant warnings” | “I don’t listen anymore – nothing ever happens” | “Warning fatigue is dangerous – it blinds you to real risk.” |
| Protection refusal | “I consciously ignore the warning” | “I’ve had bad experiences – I don’t trust it” | “Trust doesn’t grow through force – but through understanding.” |
| Behavioral uncertainty | “I don’t know what to do” | “I’ll wait – maybe it’s not that bad” | |
| Evacuation zone | “Area that must be evacuated” | “I’m just outside – so I’ll stay” | |
| Shelter-in-place | “Stay where you are” | “I thought I was supposed to leave – so what now?” | |
| Safe route | “Path that isn’t flooded” | “I don’t know the way – so I’ll stay” | |
| Displacement risk | “People have to leave their homes” | “That’s about others – I’m safe” | |
| Livelihood disruption | “Work stops, e.g. no market, no fishing” | “I can work again tomorrow – no problem” | |
| Drainage failure | “Water can’t drain away” | “That’s just a clogged drain – not dangerous” | |
| Road washout | “Road has been washed away” | “I’ll drive anyway – it’ll be fine” | |
| Bridge collapse risk | “Bridge could collapse” | “It looks stable – I’ll drive over it” | |
| Data latency | “Information arrives too late” | “I didn’t hear anything – so no danger” | |
| False alarm | “Warning that turns out to be false” | “I don’t believe any warnings anymore” | |
| Confidence level | “How certain the forecast is” | “If it’s not 100%, I don’t believe it” | |
| Scenario modeling | “What if…?” | “That’s just a thought experiment – not real” | |
| Geo-tagged report | “Report with location data” | “That’s just one person’s opinion – not relevant” | |
| Crowdsourced data | “Information from all of us” | “That’s unreliable – I only trust experts” | |
| Offline access | “Information even without internet” | “I had no signal – so no warning” | |
| No-regret measures | “Actions that are always sensible” | “I’d rather wait until it’s really bad” | |
| Preparedness level | “How ready we are” | “I don’t have a checklist – so I’m not prepared” | |
| Response capacity | “How quickly we can help” | “The fire department will come – I don’t need to do anything” | |
| Recovery timeline | “How long it takes until things are okay again” | “I thought it would be immediate – why is it taking so long?” | |
| Cultural memory | “What our grandparents experienced” | “It was different back then – that doesn’t happen today” | |
| Traditional knowledge | “Old signs of danger” | “That’s superstition – I only trust technology” | |
| Risk indicator | “Sign of impending danger” | “I don’t see anything – so everything’s fine” | |
| External data source | “Data from outside, e.g. satellites” | “That’s far away – not relevant to us” | |
| Validation | “Confirmation from our people” | “I’d rather wait for official sources” | |
| Ownership | “Our data, our decision” | “That’s the authorities’ job – I have nothing to do with it” | |
| Interoperability | “Data fits together” | “I don’t understand why that matters” | |
| Real-time data | “Current information – right now” | “I didn’t see anything – so nothing happened” | |
| Mitigation | “Measures to reduce damage” | “That’s the government’s job – not mine” | |
| Contingency plan | “Plan B for emergencies” | “I don’t have one – so I hope nothing happens” | |
| Flood extent | “How far the water reaches” | “I don’t see anything – so everything’s fine” | |
| Exposure | “How close I am to the danger” | “I’m not right by the river – so I’m safe” | |
| Adaptive capacity | “How well I can adjust” | “I’m not flexible – that’s about others” | |
| Backwater effect | “Water backs up” | “The river is flowing – what could happen?” | |
| Seasonal migration | “People move because of weather” | “That’s about nomads – not me” | |
| Hazard footprint | “Traces of danger on the map” | “I don’t see any – so nothing happened” | |
| Early impact zone | “Place where danger strikes first” | “I’m farther away – so I’m not affected” | |
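
The everyday-language glossary described above (“What it means,” “What it doesn't mean,” “What you can do”) could be stored as simple structured entries so that the same content can feed a website, a printed leaflet or a voice message. The following is only a sketch of one possible structure. The class name `GlossaryEntry`, its field names and the `render` helper are assumptions for illustration, and the action text in the usage example is a placeholder, not an official instruction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GlossaryEntry:
    """One glossary term, structured along the three glossary headings."""
    term: str
    means: str            # "What it means"
    does_not_mean: str    # "What it doesn't mean"
    what_you_can_do: str  # "What you can do"


def render(entry: GlossaryEntry) -> str:
    """Format an entry as plain text, e.g. for a leaflet or a text message."""
    return "\n".join(
        [
            entry.term,
            f"What it means: {entry.means}",
            f"What it doesn't mean: {entry.does_not_mean}",
            f"What you can do: {entry.what_you_can_do}",
        ]
    )


if __name__ == "__main__":
    example = GlossaryEntry(
        term="Evacuation recommendation",
        means="Please leave the area, it is dangerous.",
        does_not_mean="It is optional, so staying is safe.",
        # Placeholder action; real wording must come from local authorities.
        what_you_can_do="Leave now by the announced route.",
    )
    print(render(example))
```

Keeping the three fields separate makes it harder to publish a term with only its formal definition, which is the gap this section describes.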

Development and introduction of a “last mile simulator” – What happens if I misunderstand?

- Short scenarios: e.g., “I ignore the evacuation recommendation — what happens?”

- Visualization of consequences, e.g., loss of time, increased risk

I would like to consider the media/press sector separately here.

Media section

Why AI texts can exacerbate semantic problems!

- Automated reproduction instead of critical interpretation – that is, AI models trained on large volumes of text adopt terms like “centennial flood” from existing media content without questioning them. They reinforce existing language patterns, even when those are misleading or used excessively.

- Technical terms are often correctly defined, but not explained. Media texts frequently provide a formal definition of HQ100 – such as “statistically once every 100 years” – but no understandable translation into everyday language. This leads to false perceptions, because statistics are not intuitive. AI-generated texts tend to remain neutral and informative, but rarely provide concrete instructions for citizens. As a result, communication remains technical rather than action-oriented.

- It becomes problematic when media texts list multiple “centennial floods” in a single article, e.g. Opava (2024), Kamp (2002, 2013), Thaya (2006), Elbe (2002, 2013), and Ahrtal (2021). That’s at least six “centennial events” in just 22 years, and all within Central Europe. Without context regarding local gauge classifications or statistical uncertainty, the impression arises: “Centennial floods happen all the time – so the statistics must be wrong.” This overuse (semantic erosion) leads not only to conceptual vagueness but also to a loss of trust in the validity of hydrological models.

- When a media text states, “An HQ100 is an event that statistically occurs only once every hundred years,” that may be formally correct, but psychologically it is misleading, as it sounds like a temporal promise: “only once in 100 years.” It fails to explain that this refers to a 1% probability per year, and lacks an everyday translation, such as “like a 100-sided die – rolled anew each year.” Readers are left with a false understanding, which undermines trust.

- When AI-generated texts repeatedly mention “centennial floods” in media, without providing context about gauge locations, uncertainties, or recurrence probabilities, it results in inflationary usage. The term loses its meaning and becomes an emotional label.

- Climate change is often correctly cited as an amplifier (more moisture in the atmosphere, slow-moving low-pressure systems, and a meandering jet stream), but without a semantic bridge. The connection to risk perception is missing – that is, it’s not explained how this changes the statistical basis and why HQ100 today is no longer the same as it was 50 years ago. Readers learn “It’s getting worse,” but not “What does this mean for me personally?”

- Many texts end with general statements, such as “protective measures are insufficient” or “warning systems need to work better.” But they lack a concrete action perspective for the public. They don’t say what HQ100 means for everyday life, e.g. “If you live in Zone X and the water level reaches Y, please go to Location Z,” or “If you hear this, it means the water will arrive in 6 hours.” Here too, communication remains technical and abstract, rather than action-relevant and emotionally resonant.
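
The impression that “centennial floods happen all the time” in the list of rivers above can be put into context with a rough count. Because HQ100 is defined per gauge, a region with many gauges collects many separate 1%-per-year chances. The sketch below assumes a purely hypothetical number of 30 gauges covered by media reporting and treats every gauge-year as an independent draw. In reality neighbouring rivers often flood in the same weather situation (2002 and 2013 each appear for more than one river in the list), so the numbers are an illustration, not an estimate.

```python
def expected_events(gauges: int, years: int, p: float = 0.01) -> float:
    """Expected number of gauge-level HQ100 exceedances in the period.

    By linearity of expectation this holds even if gauges are correlated.
    """
    return gauges * years * p


def prob_at_least_one(gauges: int, years: int, p: float = 0.01) -> float:
    """Probability that at least one gauge records an HQ100 in the period.

    Assumes every gauge-year is an independent 1-in-100 draw (simplification).
    """
    return 1.0 - (1.0 - p) ** (gauges * years)


if __name__ == "__main__":
    GAUGES = 30  # hypothetical number of gauges, chosen only for illustration
    YEARS = 22   # period mentioned in the text
    print(f"Expected HQ100 exceedances: {expected_events(GAUGES, YEARS):.1f}")
    print(f"Chance of at least one:     {prob_at_least_one(GAUGES, YEARS):.1%}")
```

With these illustrative numbers, between six and seven gauge-level HQ100 exceedances would be expected in 22 years. Several “centennial floods” across a whole region are therefore compatible with each local statistic being correct, which is exactly the context that media texts tend to omit.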

A “flood translation hub” could act as a translation layer between technical language and everyday logic. The goal is to make early warning systems not only technically available, but also semantically understandable and relevant for action. A GeoTIFF with ground subsidence is certainly a valuable product for a GIS analyst. For a local official in a small town or village, it is only relevant if it shows, tells and explains what it means for their lives.

Semantic clarity is systemically important and a semantic bridge is needed here between technical terms and real life!

- Semantics & Space Travel – Early Warning Made Understandable

- Semantic Bridge: From Technical Term to Real Life

- Last Mile Simulator

This article was written by Birgit Bortoluzzi, creative founder of the “University of Hope” – an independent knowledge platform for resilience, education, and compassion in a complex world. (created: September 12, 2025)